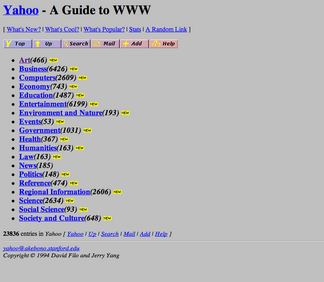

When Jerry and David’s Guide to the World Wide Web was launched in 1994, there were roughly 2,500 websites in the directory (including the very first website, created by Tim Berners-Lee at CERN). Roughly a year later, the Guide was renamed Yahoo!, still promising to catalog all of the roughly 25,000 sites then on the web.

During these early years, Yahoo! was a directory, not a search tool. The company employed a team of editors who were tasked with finding and categorizing every site on the web, producing a list in which each site was filed under one of several categories.

Side note: for those of you too young to remember the page in the screenshot below, the number to the right of each category link is the count of websites that Yahoo! knew about in that category at the time. And no, there was no Google yet. More on that in a moment.

As more sites were added, Yahoo! added more editors to the team in order to keep pace.

This was a human-scale problem.

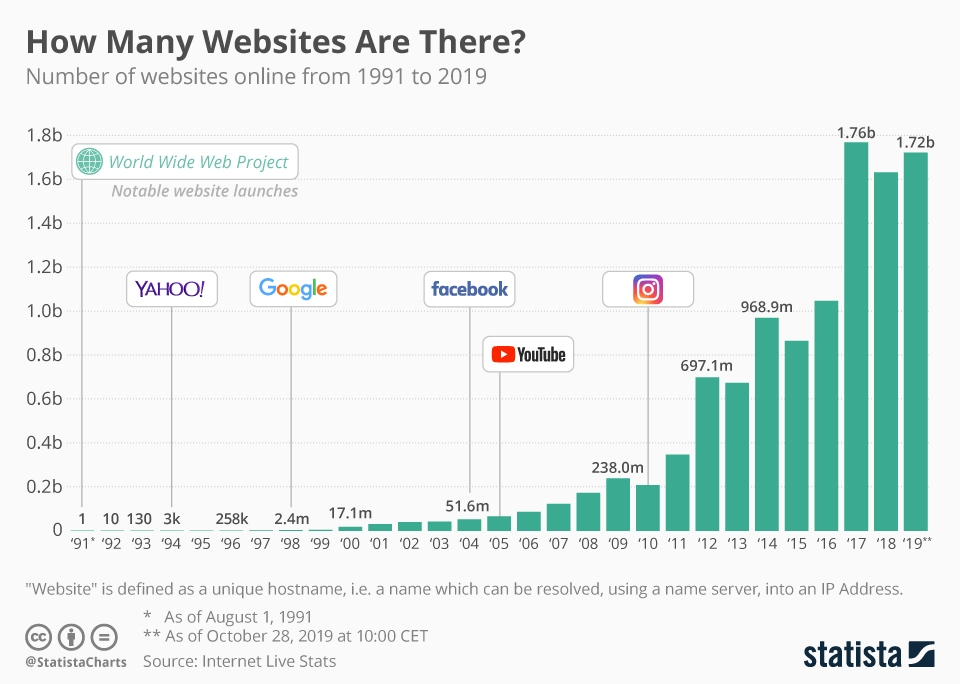

Over time, the number of sites on the web grew rapidly, exploding to more than 1.7 billion today. At that scale, Yahoo!’s team of editors could no longer keep pace, ushering in the era of Google and the automated indexing that we all rely on today.

As history shows, indexing and categorizing 1.7 billion sites is NOT a human-scale problem.

At this point, you might be wondering how this relates to cybersecurity and the enterprise attack surface.

Cybersecurity has gone through a very similar transition. It wasn’t that long ago that the number of devices and apps on the enterprise network was quite limited. Managed endpoints, internal applications running on servers in the data center, and a handful of network and infrastructure components, such as routers, switches, DNS servers, and domain controllers, made up the bulk of the network presence. Adversaries weren’t nearly as sophisticated as they are today either, using perhaps a tenth of the attack vectors now in their arsenal.

Since the attack surface was relatively contained, this was deemed a human-scale problem.

The solution? Trained security analysts were tasked with identifying and mitigating threats and vulnerabilities. As the attack surface increased, more analysts were added.

Is this starting to sound familiar?

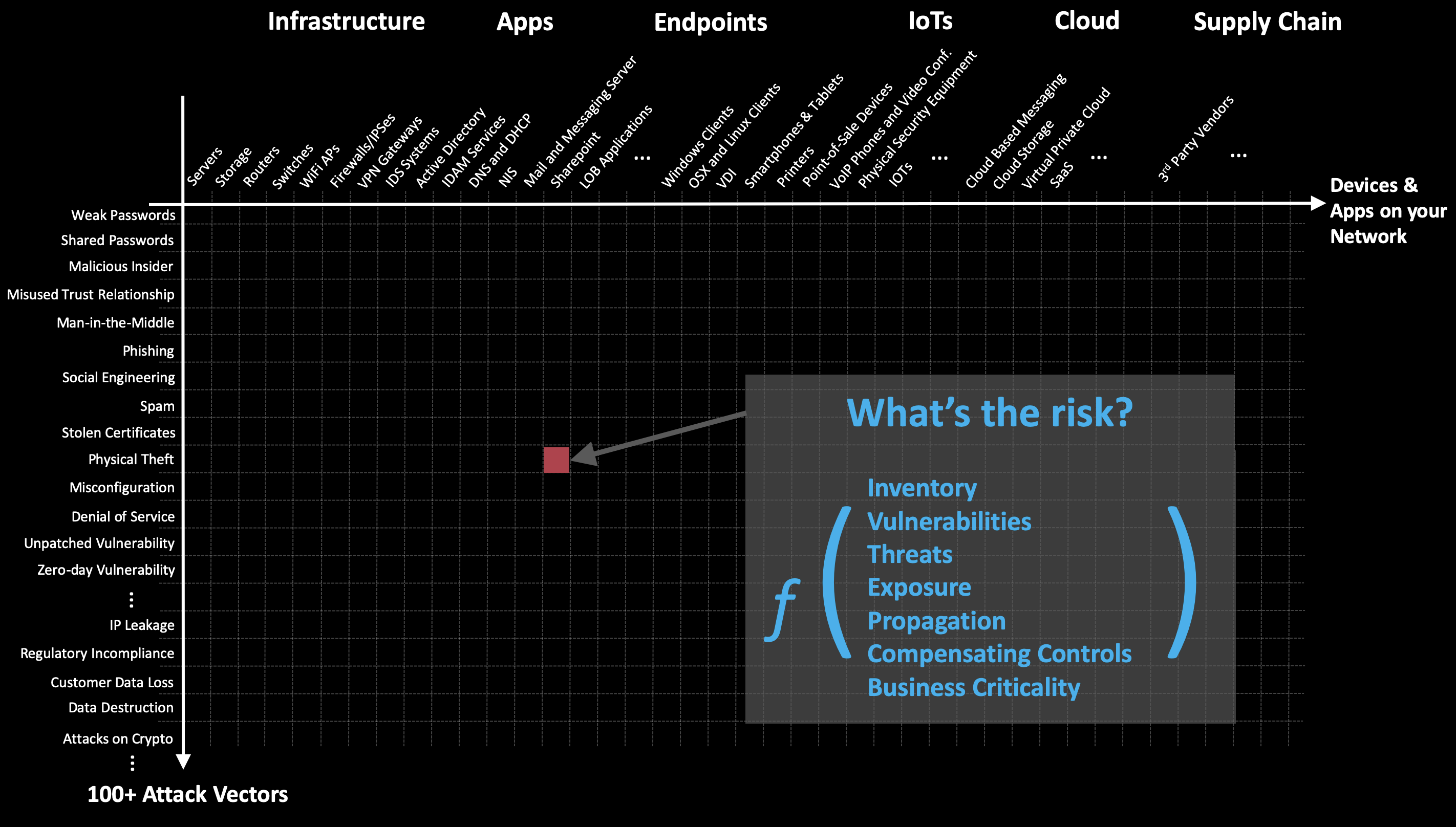

Fast forward a few short years and we’re faced with a very different picture, illustrated in the accompanying chart.

The X-axis represents all of the applications and devices you need to worry about. It includes the same internal servers, managed endpoints, and infrastructure components mentioned before. But it also includes unmanaged devices, corporate and personal cloud applications, connected IoT devices, third-party vendors, and much more. For many of you, it might not currently be possible to even enumerate your X-axis.

The Y-axis represents the methods of attack that might be used against you, from simple ones like weak or default passwords to phishing, social engineering, and known and zero-day vulnerabilities, among others.

Every point on this chart represents a potential area of compromise, and therefore risk. The chart expands as your organization grows, as you adopt new technologies, and as the bad guys come up with additional attack vectors. For a mid-sized enterprise with 1,000 employees, there are over 10 million time-varying signals that must be analyzed on an ongoing basis to predict breach risk. For larger enterprises, this number explodes to 100 billion or more signals.

This is NOT a human-scale problem.
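To make the shape of that chart concrete, here is a minimal Python sketch of the idea that every asset paired with every attack method is a point to watch. The category names are borrowed from the examples above; the function names `attack_surface` and `grid_size` are hypothetical, and the tiny counts are illustrative only. They are not the breakdown behind the 10 million figure.

```python
from itertools import product

# X-axis: kinds of assets named in the text (a real inventory would list
# individual devices and apps, not just categories).
assets = [
    "internal server",
    "managed endpoint",
    "unmanaged device",
    "cloud application",
    "IoT device",
    "third-party vendor",
]

# Y-axis: attack methods named in the text.
attack_vectors = [
    "weak or default password",
    "phishing",
    "social engineering",
    "known vulnerability",
    "zero-day vulnerability",
]


def attack_surface(assets, attack_vectors):
    """Return every (asset, attack vector) pair, i.e. every point on the chart."""
    return list(product(assets, attack_vectors))


def grid_size(n_assets, n_vectors):
    """Number of points on the chart for the given axis lengths."""
    return n_assets * n_vectors


points = attack_surface(assets, attack_vectors)
print(len(points))  # 6 assets x 5 vectors = 30

# Doubling both axes quadruples the number of points to watch.
print(grid_size(2 * len(assets), 2 * len(attack_vectors)))  # 120
```

Because the count is a product of the two axes, growth on either one multiplies the work. That is why adding analysts one at a time stops keeping up.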

Organizations that want to improve their cybersecurity posture must recognize this challenge and use automated technologies to become more like Google and less like Yahoo!, lest they face a similar fate.