June 21, 2024

As the need for greater operational efficiency in cyber risk management has increased over the past ten years, the industry has seen a discernible shift from the conventional use of Common Vulnerability Scoring System (CVSS) metrics towards a more holistic approach that aligns with risk-driven prioritization.

This transformation reflects a growing recognition among organizations that assessing vulnerabilities solely by their severity ratings may not adequately address the complex and dynamic nature of cyber threats. Instead, a risk-based approach prioritizes vulnerabilities according to their potential impact on the organization’s overall risk posture, considering factors such as threat intelligence, business context for asset criticality, and the efficacy of security controls.

This article explores the history and rationale behind this paradigm shift, its implications for cybersecurity practices, and its benefits in effectively mitigating cyber risks.

Vulnerability Management (VM) was a complex process until the industry standardized a common terminology for vulnerabilities and established a process for disclosing them through the Common Vulnerabilities and Exposures (CVE) specification. CVE paved the way for the first generation of VM, built on the Common Vulnerability Scoring System (CVSS), which prioritizes vulnerabilities by considering the level of exposure, the ease of exploitability, and the severity of the consequences.

CVSS has always assumed the worst-case scenario and scored vulnerabilities accordingly. CVSS v2 gained some adoption in the community, but only in the last decade has CVSS been widely adopted by vendors and practitioners alike, and it has had to adapt along the way. CVSS v3.1 includes more attributes for finer differentiation and provides modifying factors that account for the temporal and environmental aspects of vulnerabilities.
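The v3.1 specification publishes the exact arithmetic behind the base score. The Python sketch below transcribes its base-metric weights and equations (the function and constant names are our own, and the temporal and environmental modifiers are omitted) so the worst-case-oriented constants are easy to inspect. Treat it as an illustration and check results against FIRST's official calculator before relying on it.

```python
import math

# Base-metric weights transcribed from the CVSS v3.1 specification.
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
PRIVILEGES_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PRIVILEGES_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # used when Scope is Changed
USER_INTERACTION = {"N": 0.85, "R": 0.62}
CIA_IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal value >= input, tolerant of float noise."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (int_input // 10_000 + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute a CVSS v3.1 base score from a vector such as 'CVSS:3.1/AV:N/...'."""
    parts = [p for p in vector.split("/") if not p.startswith("CVSS:")]
    m = dict(p.split(":") for p in parts)
    scope_changed = m["S"] == "C"

    # Impact sub-score: combined confidentiality, integrity and availability loss.
    iss = 1 - ((1 - CIA_IMPACT[m["C"]]) * (1 - CIA_IMPACT[m["I"]]) * (1 - CIA_IMPACT[m["A"]]))
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    # Exploitability sub-score: how reachable and easy the attack is.
    privileges = (PRIVILEGES_CHANGED if scope_changed else PRIVILEGES_UNCHANGED)[m["PR"]]
    exploitability = (8.22 * ATTACK_VECTOR[m["AV"]] * ATTACK_COMPLEXITY[m["AC"]]
                      * privileges * USER_INTERACTION[m["UI"]])

    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))
```

For example, `base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")` returns 9.8, the familiar score of a network-reachable, unauthenticated flaw with high impact on all three properties. Nothing in the calculation knows which asset the vulnerability sits on or whether anyone is exploiting it.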

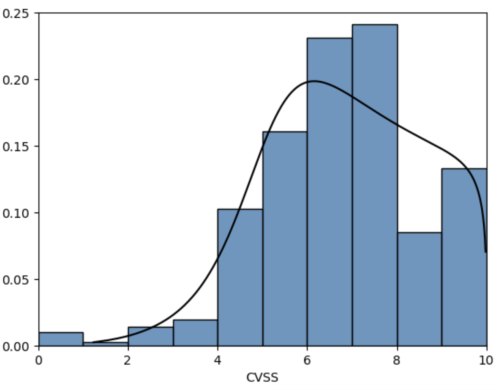

Although these modifying factors are provided for, their adoption has been minimal. Moreover, while the underlying attributes of vulnerabilities are meaningful, the formula that combines them into a CVSS score, though published, is a black-box model that is not explainable. As a result, CVSS remains a first-generation, worst-case scoring metric. As one might expect of such a metric, its scores are skewed toward the high end: vulnerabilities that are most often low in actual severity end up rated medium, high, or even critical in the worst case.

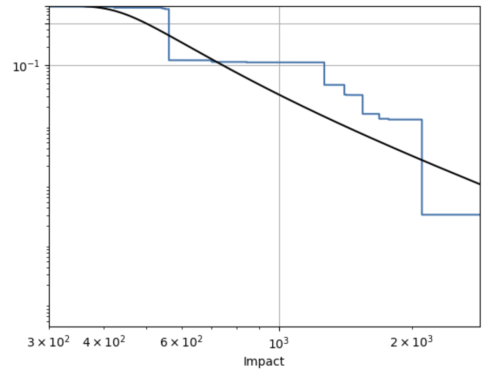

The accompanying figure shows the histogram of CVSS scores (in blue) and a fitted distribution (in black), illustrating this skew toward high scores.

This skew creates excessive work when CVSS is used for prioritization in VM: for instance, 95% of all published CVEs have a CVSS score of medium or higher. With new vulnerabilities arriving at an ever-accelerating pace, typical enterprises are exposed to hundreds of millions of vulnerability instances. As a result, this approach has become increasingly difficult to sustain, and operational efficiency keeps worsening.
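To make the severity bands concrete, here is a small Python sketch that applies the qualitative rating scale from the CVSS v3.1 specification (Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0) and measures how much of a score list a “medium or higher” policy would flag. The function names and the sample scores are illustrative assumptions, not real CVE data, so the output does not reproduce the 95% figure.

```python
from collections import Counter


def cvss_severity(score: float) -> str:
    """Map a CVSS v3.1 base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def share_medium_or_higher(scores: list[float]) -> float:
    """Fraction of scores that a 'medium or higher' policy would flag for action."""
    if not scores:
        return 0.0
    flagged = sum(1 for s in scores if cvss_severity(s) in {"Medium", "High", "Critical"})
    return flagged / len(scores)


# Illustrative scores only, not drawn from any vulnerability feed.
sample = [3.1, 5.3, 6.5, 7.5, 7.8, 8.8, 9.8, 5.5, 4.3, 7.2]
print(Counter(cvss_severity(s) for s in sample))
print(f"{share_medium_or_higher(sample):.0%} flagged under a 'medium or higher' policy")
```

Even in this tiny sample, only one score escapes the policy, which is the operational problem in miniature.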

Despite this operational challenge, CVSS has been extremely popular because each vulnerability receives a single score that applies collectively across all of its instances or occurrences.

This lends itself well to defining policies, audit mechanisms, and regulations, since it provides a coarse, time-invariant metric on which to base policy definitions. As a result, most enterprise compliance policies have readily adopted CVSS or derivative scores as the basis for defining SLAs.
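A CVSS-keyed SLA policy is simple precisely because the score is time-invariant: the due date can be fixed on the day a vulnerability is found and never revisited. The sketch below assumes a hypothetical SLA table (15, 30, 90, and 180 days); real policies vary by organization and regulation.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical SLA table: days allowed to remediate, keyed on CVSS severity.
SLA_DAYS = {"Critical": 15, "High": 30, "Medium": 90, "Low": 180}


def severity_band(score: float) -> str:
    """CVSS v3.1 qualitative rating for a base score in [0.0, 10.0]."""
    if score >= 9.0:
        return "Critical"
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    if score > 0.0:
        return "Low"
    return "None"


def remediation_due(cvss_score: float, discovered: date) -> Optional[date]:
    """Fixed deadline: the CVSS score never changes, so neither does the due date."""
    days = SLA_DAYS.get(severity_band(cvss_score))
    return None if days is None else discovered + timedelta(days=days)


print(remediation_due(9.8, date(2024, 6, 1)))  # 2024-06-16
```

The same deadline holds whether or not anyone ever attempts to exploit the vulnerability, which is what makes such policies easy to audit and expensive to meet.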

In the past decade, threat intelligence gathering and disclosure matured significantly, presenting an opportunity to make VM more operationally efficient. Public databases such as ExploitDB and open frameworks such as the Metasploit Framework gained momentum, and several businesses, indeed an entire industry, emerged around scouring the internet and the dark web for threat-related signals. These signals have proven helpful in moving away from conservative worst-case assumptions about vulnerabilities.

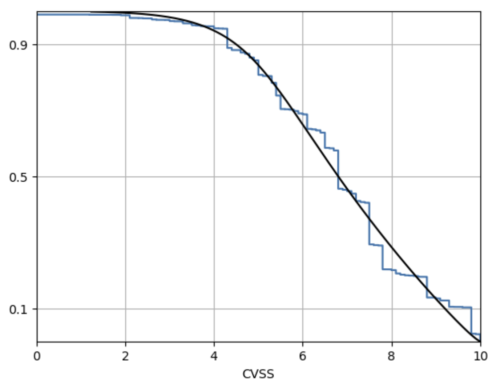

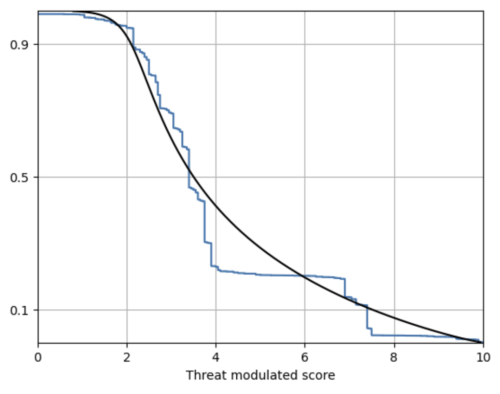

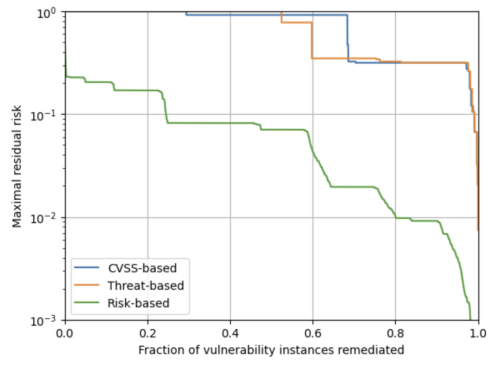

Although many threat-based approaches categorize the threat level into critical, high, medium, and low buckets, one can also derive a threat-modulated score on the same 0-10 scale as CVSS. The accompanying complementary cumulative distribution plot shows one such score.

Threat-based modulation is more efficient: around 5% of vulnerabilities fall in the critical range, close to the proportion of vulnerabilities historically exploited, and confining attention to the top 20% casts a sufficiently wide net.
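The post does not publish its modulation formula, so the Python sketch below uses hypothetical threat weights purely to show the mechanics: scale the worst-case score down unless there is evidence of threat activity, then read the complementary cumulative distribution (the share of scores at or above a threshold) and the cutoff for the top 20%. The weights, names, and inputs are all illustrative assumptions.

```python
import math

# Hypothetical threat weights (not a published standard): a vulnerability keeps
# its full CVSS score only when there is evidence of active exploitation.
THREAT_WEIGHT = {
    "active_exploitation": 1.0,
    "public_exploit": 0.8,
    "no_known_threat": 0.4,
}


def threat_modulated(cvss: float, threat: str) -> float:
    """Scale a CVSS base score by a threat weight; the result stays on the 0-10 scale."""
    return round(cvss * THREAT_WEIGHT[threat], 1)


def ccdf(scores: list[float], x: float) -> float:
    """Complementary cumulative distribution, evaluated inclusively: share of scores >= x."""
    return sum(s >= x for s in scores) / len(scores)


def top_fraction_cutoff(scores: list[float], fraction: float) -> float:
    """Lowest score inside the top `fraction` of scores (ties at the cutoff are included)."""
    ranked = sorted(scores, reverse=True)
    k = max(1, math.ceil(fraction * len(ranked)))
    return ranked[k - 1]


# Illustrative inputs only, not real CVE data.
vulns = [(9.8, "active_exploitation"), (9.8, "no_known_threat"),
         (7.5, "public_exploit"), (7.5, "no_known_threat"), (5.3, "no_known_threat")]
raw = [cvss for cvss, _ in vulns]
modulated = [threat_modulated(cvss, threat) for cvss, threat in vulns]
print("critical share, raw CVSS:", ccdf(raw, 9.0))
print("critical share, threat-modulated:", ccdf(modulated, 9.0))
print("top-20% cutoff:", top_fraction_cutoff(modulated, 0.2))
```

The two 9.8s illustrate the point: identical under CVSS, they land at opposite ends of the modulated distribution once threat evidence is applied.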

The threat-based score that has received the most attention is the Exploit Prediction Scoring System (EPSS), which takes a different approach. Rather than a threat-modulated severity score, EPSS is purely an exploit prediction score: recomputed daily, it estimates the probability that a vulnerability will be exploited in the wild within the next 30 days. While this may be a helpful signal, it is not a prioritization tool by itself.

It is beneficial as an early warning of imminent threat against a vulnerability, and therefore in “war-time” VM, but these same characteristics make it ill-suited for long-term, “peace-time” VM. It is an excellent threat-hunting metric, useful for separating high-EPSS from low-EPSS vulnerabilities, but a poor VM metric, since it is not a good means of prioritizing within either set.

Even for the former purpose, direct evidence of imminent threat, such as a published exploit or reports of active exploitation, serves as a close enough proxy and is straightforward to interpret.
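The threat-hunting use of EPSS described here amounts to a threshold split. The Python sketch below partitions vulnerabilities into high- and low-EPSS sets; the 0.1 threshold, the placeholder identifiers, and the scores are hypothetical choices for illustration, not FIRST recommendations or real EPSS data.

```python
def split_by_epss(epss: dict[str, float], threshold: float = 0.1) -> tuple[list[str], list[str]]:
    """Partition vulnerabilities into high- and low-EPSS sets.

    EPSS values are probabilities in [0, 1] of exploitation in the next 30 days.
    The default threshold of 0.1 is a hypothetical choice for illustration.
    """
    for vuln, p in epss.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{vuln}: EPSS must be a probability, got {p}")
    high = sorted(v for v, p in epss.items() if p >= threshold)
    low = sorted(v for v, p in epss.items() if p < threshold)
    return high, low


# Placeholder identifiers and scores, not real EPSS data.
scores = {"vuln-a": 0.92, "vuln-b": 0.004, "vuln-c": 0.31, "vuln-d": 0.0007}
high, low = split_by_epss(scores)
print("hunt first:", high)
print("watch list:", low)
```

The split answers “which set?” but leaves open how to order the work inside each set, which is the gap the post argues EPSS alone cannot close.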

While adding the threat information allows for a more efficient way to operationalize VM, it presents some fundamental operational challenges.

Since threat can vary over time, adopting threat-based prioritization means operations must account for this time variance. VM operations need to absorb revised priority scores periodically, and if the goal is to react to threat changes at a granular level, this period needs to be short, e.g., daily.

VM teams are not operationally used to this time variance of priorities. A related challenge also arises in defining compliance policies – remediation or mitigation SLAs for vulnerabilities now need to account for time-varying priorities, which presents challenges in how these requirements are communicated and tracked for auditing purposes.
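One way to see the SLA problem is to recompute a deadline every time the daily rescoring changes a priority. The Python sketch below assumes a hypothetical SLA table and a hypothetical rule (escalations can pull a deadline in, de-escalations never push it out); neither comes from the post or from any standard.

```python
from datetime import date, timedelta

# Hypothetical SLA table (days to remediate) keyed on a threat-informed priority.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


def initial_deadline(priority: str, discovered: date) -> date:
    """Deadline assigned when the instance is first scored."""
    return discovered + timedelta(days=SLA_DAYS[priority])


def rescore_deadline(current_due: date, new_priority: str, rescored_on: date) -> date:
    """Deadline after a daily rescoring.

    Hypothetical rule: an escalation can pull the deadline in, but a de-escalation
    never pushes it out, so auditors only ever see deadlines move earlier.
    """
    candidate = rescored_on + timedelta(days=SLA_DAYS[new_priority])
    return min(current_due, candidate)


due = initial_deadline("medium", date(2024, 6, 1))          # 2024-08-30
due = rescore_deadline(due, "critical", date(2024, 6, 10))  # exploit reported: 2024-06-17
due = rescore_deadline(due, "low", date(2024, 6, 12))       # threat subsides: still 2024-06-17
print(due)
```

Even this simple rule has to be stored, communicated, and audited per instance and per day, which is the operational burden the paragraph describes.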

Finally, although threat-based metrics are a step toward improved efficiency, they are far from a panacea for VM: even the instances of the top 20% of vulnerabilities under a threat-modulated metric amount to too large an influx of work for complete remediation to be operationally achievable.

This leads to exceptions being approved for non-compliance and the accompanying risk acceptance, which defeats the goal of risk reduction.

The sheer number of vulnerability instances that still require attention limits the gains from prioritization. To improve efficiency further, one needs to account not only for the temporal variations introduced by threat intel but also for the spatial variations implicit in any enterprise’s assets. The figure below shows the proportion of assets in a typical enterprise whose impact (the worst-case cost of compromising the asset) exceeds the value on the X-axis (in blue), along with a simple power-law fit (in black).
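A power law of this kind is usually written through its complementary cumulative distribution. As a sketch, assuming a Pareto form with minimum impact $x_{\min}$ and exponent $\alpha$ (symbols introduced here; the post does not report fitted values):

$$
\bar{F}(x) = \Pr[\text{impact} > x] = \left(\frac{x}{x_{\min}}\right)^{-\alpha}, \qquad x \ge x_{\min},\ \alpha > 0.
$$

On log-log axes this is a straight line with slope $-\alpha$, and the share of assets above a materiality threshold $x_m$ is $(x_m / x_{\min})^{-\alpha}$. For example, with $\alpha = 1$, assets whose impact exceeds $1000\,x_{\min}$ make up $1000^{-1} = 0.1\%$ of the total.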

This picture makes it evident that the fraction of assets whose compromise is material is tiny. A risk-driven approach to scoring vulnerabilities singles out the instances that expose such material assets by incorporating asset criticality into the severity of compromise, which bubbles those instances up in the risk-score ranking.
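The core idea can be sketched as expected loss per instance: the likelihood that an instance leads to compromise, times the impact of the asset it sits on. The schema, field names, and numbers in this Python sketch are hypothetical; the post does not disclose its scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """One occurrence of a vulnerability on one asset (hypothetical schema)."""
    vuln_id: str
    asset: str
    likelihood: float  # probability of compromise via this instance, 0-1
    impact: float      # worst-case cost of compromising the asset, in dollars

    @property
    def risk(self) -> float:
        # Expected loss: likelihood of compromise times the asset's impact.
        return self.likelihood * self.impact


def prioritize(instances: list[Instance]) -> list[Instance]:
    """Order instances by risk, highest first."""
    return sorted(instances, key=lambda inst: inst.risk, reverse=True)


# Illustrative values only: the same vulnerability on two very different assets.
instances = [
    Instance("vuln-a", "test-vm", 0.30, 2_000),
    Instance("vuln-a", "billing-db", 0.30, 5_000_000),
    Instance("vuln-b", "billing-db", 0.02, 5_000_000),
    Instance("vuln-b", "kiosk", 0.90, 1_000),
]
for inst in prioritize(instances):
    print(f"{inst.vuln_id} on {inst.asset}: expected loss ${inst.risk:,.0f}")
```

Under CVSS, both `vuln-a` instances would share one score; here the one on the high-impact asset outranks the one on the test VM by orders of magnitude.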

A few things are noteworthy here:

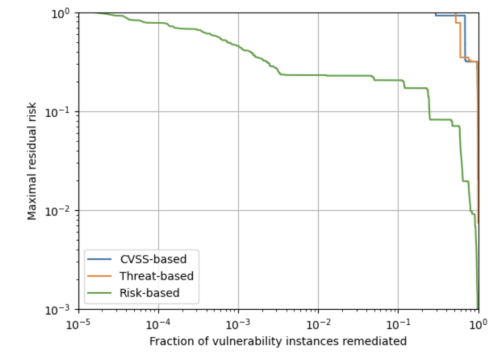

Note that in the plot above, the risk-based approach appears to get a “head start,” with a non-zero value even before any remediations. This is purely due to its focus on remediating a small fraction of vulnerability instances: a logarithmic scale shows that a massive dent in risk is feasible by remediating just the top 0.3% of vulnerability instances.
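The curve behind this observation can be sketched by ranking instances by risk, remediating from the top, and tracking the share of total risk removed. The Pareto-distributed risks in this Python sketch are synthetic (the shape of 1.1 is an arbitrary assumption), so the printed percentages illustrate the shape of such a curve, not the 0.3% result from the plot.

```python
import math
import random


def risk_reduction_curve(risks: list[float], fractions: list[float]) -> dict[float, float]:
    """Share of total risk removed by remediating the top `f` fraction of instances.

    Instances are remediated in descending order of risk, as a risk-driven
    approach would; each fraction f must lie in [0, 1].
    """
    ranked = sorted(risks, reverse=True)
    prefix = [0.0]  # prefix[k] = risk removed after fixing the k riskiest instances
    for r in ranked:
        prefix.append(prefix[-1] + r)
    total = prefix[-1]
    if total <= 0:
        raise ValueError("total risk must be positive")
    curve = {}
    for f in fractions:
        if not 0.0 <= f <= 1.0:
            raise ValueError(f"fraction must be in [0, 1], got {f}")
        curve[f] = prefix[math.ceil(f * len(ranked))] / total
    return curve


# Synthetic, heavy-tailed risks, not measured enterprise data.
random.seed(7)
risks = [random.paretovariate(1.1) for _ in range(100_000)]
for f, removed in risk_reduction_curve(risks, [0.003, 0.01, 0.2, 1.0]).items():
    print(f"remediate top {f:.1%} -> {removed:.1%} of total risk removed")
```

The heavier the tail of the risk distribution, the more of the total sits in the first few ranked instances, which is why a logarithmic X-axis is the natural way to read such a plot.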

Beyond aligning operational prioritization with achievable risk reduction and unlocking significant efficiency gains, the risk-driven approach offers a few other advantages.

The CVSS approach is more robust than the threat-based approach, which gives confidence in its reliability. However, with the emergence of AI as a tool for exploitation, the assumption that actively exploited vulnerabilities are only a small fraction of all vulnerabilities is on shaky ground.

This will likely make threat-based approaches less appealing than the conservative CVSS approach. Yet the operational inefficiencies of a CVSS-like approach will push leaders toward a granular risk-based approach, a robust strategy that does not rely on external factors to drive efficiency.

The risk-based approach also gives security teams multiple options. Where remediation is not immediately feasible, deploying security controls, enforcing system hardening, or running awareness and training campaigns are all automatically factored into the risk scoring, buying more time for remediation while keeping risk in check.

In the other approaches, the effect of such measures is ultimately a black box, and adopting a control that is ineffective in a particular situation can create a false sense of security. When buying more time for security teams while denying any to adversaries is critical, this flexibility is extremely beneficial!
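A minimal way to picture controls being “factored into the risk scoring” is to discount an instance's risk by each control's effectiveness. The Python sketch below assumes a hypothetical model in which each control independently stops a fixed fraction of attacks; the effectiveness figures are illustrative, and a real scoring engine would need evidence that a control actually applies to the attack path.

```python
def residual_risk(inherent_risk: float, control_effectiveness: list[float]) -> float:
    """Risk left after compensating controls.

    Hypothetical model: each control independently stops a fraction `e` of the
    attacks that reach it, so the surviving fraction is the product of (1 - e).
    """
    residual = inherent_risk
    for e in control_effectiveness:
        if not 0.0 <= e <= 1.0:
            raise ValueError(f"control effectiveness must be in [0, 1], got {e}")
        residual *= 1.0 - e
    return residual


# Illustrative numbers: an unpatched instance with $100,000 expected loss,
# behind an EDR agent assumed 60% effective and hardening assumed 50% effective.
print(residual_risk(100_000, [0.6, 0.5]))
# A control that does not cover this attack path should be entered as 0.0,
# not assumed effective; otherwise the score gives a false sense of security.
print(residual_risk(100_000, [0.0]))
```

Because the discount is explicit, a team can see how much time a control buys and can revisit the assumption if the control turns out not to apply.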

Whereas the CVSS and threat-based approaches are well defined and operational for software vulnerabilities (CVEs), the risk-based approach has no such constraint. It readily applies to a broader class of vulnerabilities affecting a wider set of assets and their components, be it source code vulnerabilities in custom applications, configuration vulnerabilities in cloud assets, access control vulnerabilities in user management, or credential vulnerabilities for a user. This provides a unified way to understand risk across the enterprise and prioritize on that basis, enabling rigorous, data-driven prioritization across functions, something other approaches leave largely to guesswork.
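Because every finding is reduced to the same expected-loss scale, heterogeneous exposures can be ranked side by side. The schema, class labels, and values in this Python sketch are hypothetical and only illustrate the idea of a unified risk register.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One exposure of any kind, scored on a common scale (hypothetical schema)."""
    kind: str          # e.g. "software_cve", "source_code", "cloud_config", "access_control", "credential"
    asset: str
    likelihood: float  # probability of compromise through this finding, 0-1
    impact: float      # worst-case cost of compromising the asset, in dollars

    @property
    def risk(self) -> float:
        return self.likelihood * self.impact


def risk_by_kind(findings: list[Finding]) -> list[tuple[str, float]]:
    """Total expected loss per vulnerability class, largest first."""
    totals: dict[str, float] = defaultdict(float)
    for f in findings:
        totals[f.kind] += f.risk
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Illustrative findings only.
findings = [
    Finding("software_cve", "web-01", 0.05, 200_000),
    Finding("cloud_config", "public-bucket", 0.20, 1_000_000),
    Finding("credential", "svc-account", 0.10, 500_000),
    Finding("software_cve", "laptop-17", 0.30, 5_000),
]
for kind, risk in risk_by_kind(findings):
    print(f"{kind:>14}: ${risk:,.0f}")
```

In this made-up register, a cloud misconfiguration outranks every CVE, a comparison that severity scores defined only for CVEs cannot express.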

Balbix enables best-in-class risk-based vulnerability management for Global 2000 accounts. Sign up for a demo today!