August 13, 2024

In the rapidly evolving world of cybersecurity, staying ahead of threats requires more than just reactive measures—it demands foresight and precision. Enter the 4th generation of vulnerability prioritization, a paradigm shift that’s redefining how organizations approach their exposure management strategies. But what exactly is this new wave of prioritization, and why is it generating so much buzz in the cybersecurity community?

In this blog, we’ll dive into the principles of 4th generation vulnerability (and exposure) prioritization, exploring how it differs from previous methods, the cutting-edge technologies that power it, and why it might be the key to bolstering your organization’s defenses in an increasingly complex threat landscape. Whether you’re a seasoned cybersecurity professional or just looking to understand the latest trends, this discussion will unpack the reasons behind the growing excitement around this innovative approach.

For those who came in late, vulnerability prioritization is the process of identifying and ranking software and system vulnerabilities by the risk they pose to an organization. The goal is to focus remediation efforts on the critical vulnerabilities that could cause significant damage if exploited.

The concept of vulnerability prioritization has evolved over time through different generations, each reflecting advancements in technology, threat landscapes, and the methodologies used to assess and manage vulnerabilities. Each generation builds on the previous one, adding more layers of context, intelligence, and automation to help organizations manage vulnerabilities and exposures more effectively and efficiently.

Here’s a brief overview of the previous generations:

Ranking Vulnerabilities Based on Severity Alone

The first generation of vulnerability management focused primarily on identifying and cataloging vulnerabilities. This approach was largely reactive, relying on basic tools and scanners to detect known vulnerabilities in systems and networks. The process was often manual: security teams ran scans every few months, ranked the findings by the severity ratings their scanners reported, and patched whatever met an agreed severity threshold.

1st generation vulnerability prioritization relied heavily on the Common Vulnerability Scoring System (CVSS). CVSS provides a numerical score (0-10) that represents the severity of a vulnerability, considering factors like exploitability and impact on confidentiality, integrity, and availability.

While CVSS is useful for understanding vulnerability severity, it assumes worst-case scenarios and does not account for an organization’s specific context. As a result, a large number of vulnerabilities appear to be high or critical severity and seemingly require immediate remediation. This overwhelms security teams, when in reality only a fraction are actually exploitable.

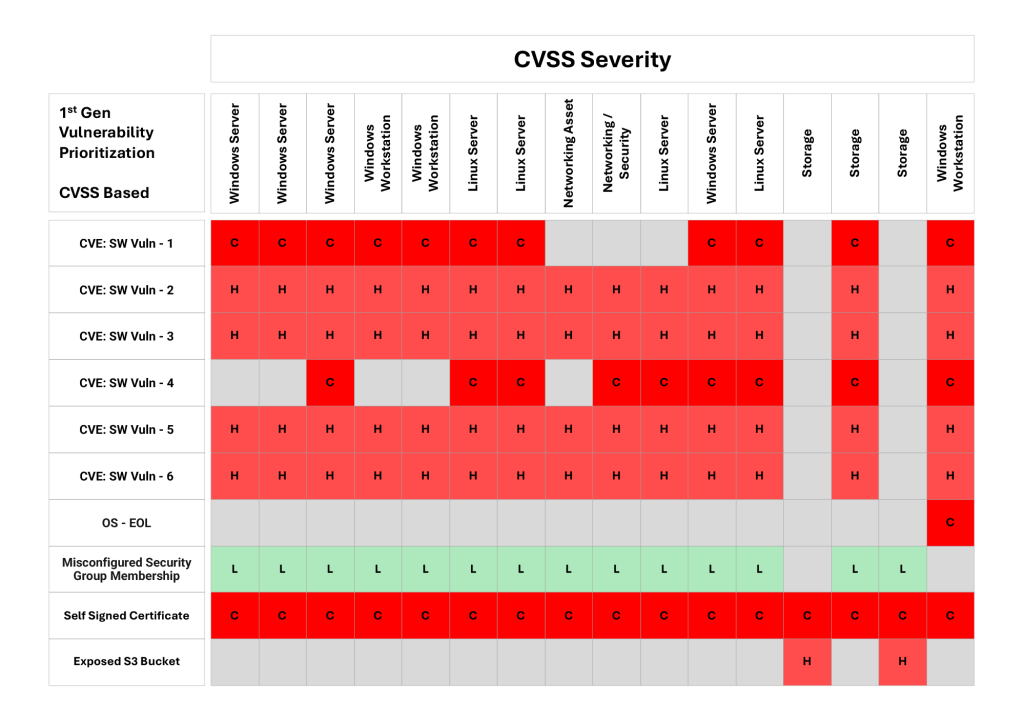

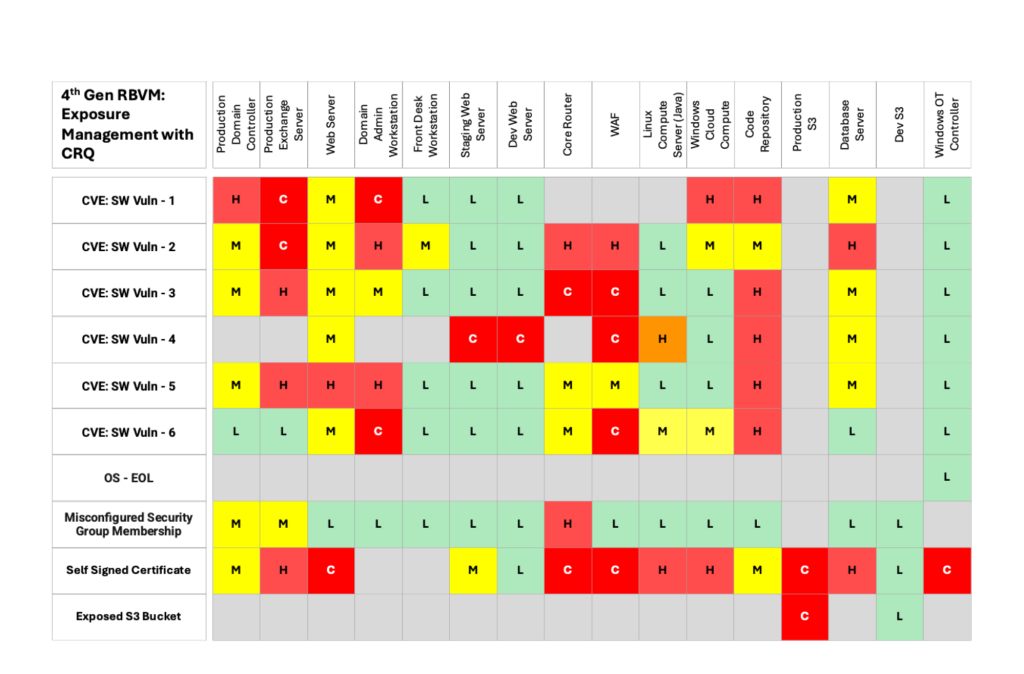

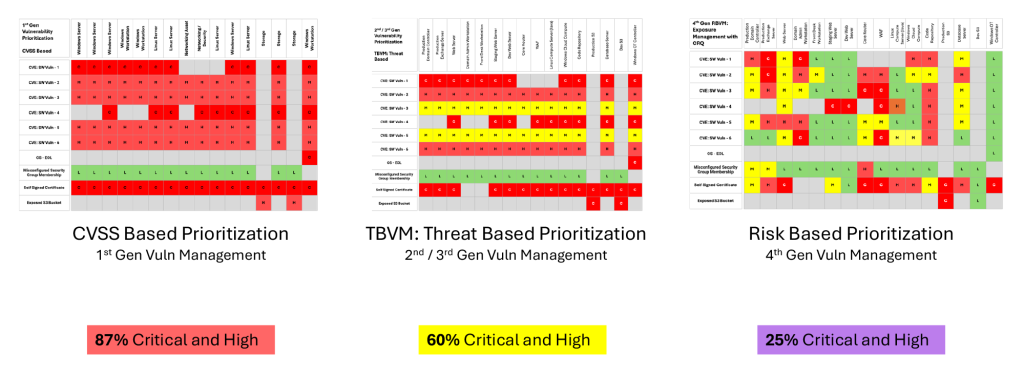

Table 1 shows the vulnerabilities in the right-hand column for each of the assets listed in the top row. These include not just CVEs but also misconfigurations, end-of-life operating systems, and other exposure types. The CVSS severity (L=low, H=high, C=critical) is then shown for each unique vulnerability (each row). In this typical example, each vulnerability’s CVSS score is identical across every asset, and nearly all (87%) are rated critical or high.
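To make the first-generation approach concrete, here is a minimal sketch of severity-only ranking. It uses the qualitative rating bands from the CVSS v3.x specification: None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, and Critical 9.0 to 10.0. It also computes the share of findings rated high or critical, the headline figure quoted for Table 1. The `Finding` type, the asset names, and the `VULN-n` identifiers are hypothetical placeholders, not data from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str      # asset the finding was reported on
    vuln_id: str    # CVE ID or other exposure identifier (hypothetical placeholders below)
    cvss: float     # CVSS v3.x base score, 0.0-10.0


def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def rank_by_severity(findings):
    """First-generation prioritization: sort by base score alone, highest first.
    Instances of the same vulnerability on different assets always tie."""
    return sorted(findings, key=lambda f: f.cvss, reverse=True)


def high_or_critical_share(findings) -> float:
    """Fraction of findings rated High or Critical."""
    if not findings:
        return 0.0
    flagged = sum(cvss_rating(f.cvss) in ("High", "Critical") for f in findings)
    return flagged / len(findings)


findings = [
    Finding("web-01", "VULN-1", 9.8),
    Finding("db-01", "VULN-1", 9.8),   # same vulnerability, same score, different asset
    Finding("web-01", "VULN-2", 7.5),
    Finding("hr-laptop", "VULN-3", 5.3),
]
print(high_or_critical_share(findings))  # 0.75
```

Because the sort key is the base score alone, nothing in this ranking can separate the internet-facing `web-01` instance of `VULN-1` from the `db-01` instance. That tie is the limitation the later generations address.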

CVSS-based prioritization makes several key assumptions that limit its accuracy:

While CVSS v3.1 provides temporal and environmental metric groups for adjusting a base score, manually adjusting these for millions of vulnerability instances proved impractical.
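The sketch below shows what one such adjustment involves. It computes a CVSS v3.1 temporal score from a base score and the three temporal metrics: Exploit Code Maturity, Remediation Level, and Report Confidence. The weights and the `roundup` rule follow the FIRST CVSS v3.1 specification as we understand it. The environmental group is omitted for brevity, and the function names are our own.

```python
import math

# CVSS v3.1 temporal metric weights; "X" means Not Defined.
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value,
    computed on integers to avoid floating-point noise."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Temporal score = Roundup(Base x E x RL x RC)."""
    return roundup(
        base * EXPLOIT_CODE_MATURITY[e] * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc]
    )


# A 9.8 base score with a proof-of-concept exploit and an official fix available.
print(temporal_score(9.8, e="P", rl="O", rc="C"))  # 8.8
```

Each instance needs its own `e`, `rl`, and `rc` inputs, plus the environmental inputs not shown here. Setting these by hand across millions of instances is what made the approach impractical.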

Adding Threat Information

The second generation introduced threat context into vulnerability management. By incorporating external threat intelligence, organizations could focus on software vulnerabilities that were most likely to be exploited, thus making their vulnerability management processes more dynamic and responsive to the evolving threat landscape.

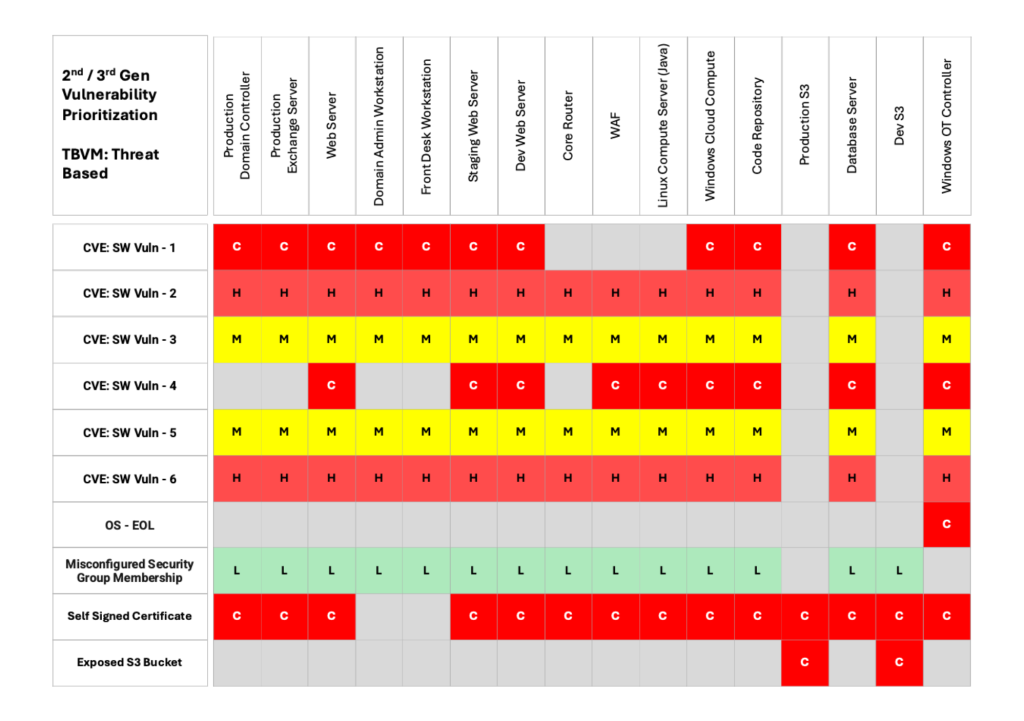

Threat-Based Vulnerability Management (TBVM) prioritizes CVEs based on their threat level. This reduces the number of vulnerabilities marked high or critical compared to severity-based models.

Table 2 shows how adding threat information like risk scores and exploit prediction refines the vulnerability scores. Only 60% of vulnerabilities are scored as high or critical, versus 87% under the severity-based model.
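As a rough illustration of second-generation logic, the sketch below lets severity earn a high or critical rating only when threat evidence backs it. The evidence can be an exploited-in-the-wild flag (such as a listing in CISA’s Known Exploited Vulnerabilities catalog) or threat-intelligence reporting. The rating rule, the `intel_mentions` field, and the `CVE-EXAMPLE-n` identifiers are hypothetical and are not the model behind Table 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vuln:
    cve: str
    cvss: float              # CVSS base score, 0.0-10.0
    exploited_in_wild: bool  # e.g. listed in a known-exploited-vulnerabilities feed
    intel_mentions: int      # hypothetical count of threat-intel reports on active use


def threat_adjusted_rating(v: Vuln) -> str:
    """Hypothetical rule: severity only earns High/Critical when backed by threat evidence."""
    if v.exploited_in_wild:
        return "Critical" if v.cvss >= 7.0 else "High"
    if v.intel_mentions > 0 and v.cvss >= 7.0:
        return "High"
    return "Medium" if v.cvss >= 4.0 else "Low"


def rank_by_threat(vulns):
    """Order by threat evidence first, using severity only as a tie-breaker."""
    return sorted(
        vulns,
        key=lambda v: (v.exploited_in_wild, v.intel_mentions, v.cvss),
        reverse=True,
    )


vulns = [
    Vuln("CVE-EXAMPLE-1", 9.8, False, 0),
    Vuln("CVE-EXAMPLE-2", 7.5, True, 3),
    Vuln("CVE-EXAMPLE-3", 8.1, False, 2),
]
print([v.cve for v in rank_by_threat(vulns)])
```

The 9.8 vulnerability with no threat evidence drops below a 7.5 that is actively exploited. This is the kind of re-ordering that shrinks the high and critical share between Table 1 and Table 2.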

Threat-Based Vulnerability Prioritization evaluates factors such as:

Adding More Context and EPSS

Third generation vulnerability prioritization brought more context into vulnerability management. Instead of just focusing on the vulnerabilities themselves, this approach began to consider factors like asset criticality, exploitability, and potential business impact. Vulnerability management tools became more sophisticated, allowing organizations to prioritize vulnerabilities based on the specific risks they posed to critical assets. This generation marked the shift from treating all vulnerabilities equally to understanding which vulnerabilities could cause the most harm if exploited.

The third generation of vulnerability prioritization also incorporates the Exploit Prediction Scoring System (EPSS). EPSS estimates the probability that a vulnerability will be exploited in the wild within the next 30 days, using a model trained on historical exploitation data.

EPSS is less relevant when a vulnerability is already known to be exploited in the wild, because it is designed as an early-warning system for future exploitation. Furthermore, EPSS cannot help prioritize non-software exposures such as misconfigurations, because its prediction model only scores published CVEs.
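A minimal sketch of how an EPSS probability might slot into triage, including the two limitations just described. Known-exploited vulnerabilities skip the prediction step, and exposures without a CVE get no EPSS score at all. The `epss_triage` function, its return labels, and the 0.1 threshold are hypothetical choices for illustration, not recommended cut-offs.

```python
from typing import Optional


def epss_triage(cve_id: Optional[str], epss: Optional[float],
                known_exploited: bool, threshold: float = 0.1) -> str:
    """Hypothetical triage step using an EPSS probability (0.0-1.0).

    cve_id is None for exposures with no CVE (misconfigurations,
    end-of-life software, weak credentials), which EPSS does not score.
    """
    if known_exploited:
        # Already exploited in the wild: act on the evidence, not the prediction.
        return "remediate now"
    if cve_id is None:
        return "no EPSS score: prioritize by other means"
    if epss is None:
        return "EPSS score unavailable"
    if not 0.0 <= epss <= 1.0:
        raise ValueError(f"EPSS is a probability, got {epss}")
    return "prioritize" if epss >= threshold else "monitor"


print(epss_triage("CVE-EXAMPLE-1", 0.35, known_exploited=False))
print(epss_triage(None, None, known_exploited=False))
```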

Incorporating Severity, Threat Levels, Exploitability, Security Controls, and Business Impact

The 4th generation of vulnerability prioritization addresses the shortcomings of previous models by integrating comprehensive risk assessment into the prioritization process and extending coverage to all types of exposures. This generation leverages artificial intelligence and big data analytics to predict which specific vulnerability instances and exposures are most likely to be successfully exploited against the enterprise and are material to the organization, even before they become active threats.

Risk-based prioritization evaluates exposures on a combination of five factors: severity, threat level, exploitability, business impact, and the effectiveness of existing security controls. This ensures that exposures are prioritized not only by their severity but also by their specific risk context within the organization.
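One simple way to combine the five factors is sketched below. Severity, threat, exploitability, and the gap left by security controls together form a likelihood of a successful breach, and that likelihood is multiplied by the business impact in dollars. This multiplicative model, the 0 to 1 scales, and the `Exposure` fields are our own assumptions for illustration. They are not Balbix’s scoring model or any standard formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exposure:
    severity: float               # 0-10, e.g. the CVSS base score
    threat: float                 # 0-1, how actively attackers target this weakness
    exploitability: float         # 0-1, how reachable the instance is (1.0 = internet-facing)
    control_effectiveness: float  # 0-1, share of attacks existing controls would stop
    business_impact: float        # estimated loss in dollars if this asset is breached

    def __post_init__(self):
        for name in ("threat", "exploitability", "control_effectiveness"):
            if not 0.0 <= getattr(self, name) <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1")
        if not 0.0 <= self.severity <= 10.0:
            raise ValueError("severity must be between 0 and 10")


def risk_score(x: Exposure) -> float:
    """Hypothetical model: likelihood of a successful breach times its dollar cost."""
    likelihood = (x.severity / 10.0) * x.threat * x.exploitability * (1.0 - x.control_effectiveness)
    return likelihood * x.business_impact


internet_facing = Exposure(9.8, 0.6, 1.0, 0.2, 2_000_000)
well_defended = Exposure(9.8, 0.6, 0.3, 0.9, 2_000_000)
print(risk_score(internet_facing) > risk_score(well_defended))  # True
```

A multiplicative form means any factor near zero (an unreachable asset, or near-complete control coverage) drives risk toward zero. A weighted sum is a common alternative when that behavior is not wanted.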

Risk-based prioritization shown in Table 3 represents, in effect, the first generation of exposure prioritization. The core factors added on top of exposure severity and threat levels include:

Exploitability: Exploitability measures how accessible an asset and its vulnerabilities are to adversaries; external-facing assets, for example, are more accessible than internal ones. The assessment considers how easily attackers could discover and use each vulnerability.

Controls Analysis: Controls analysis evaluates how effectively security controls are implemented to protect an organization’s assets against the Tactics, Techniques, and Procedures (TTPs) used by attackers. It assesses how well these controls can prevent, detect, and respond to each attack vector. The analysis covers technical controls such as firewalls, intrusion detection systems, and encryption, as well as administrative controls such as policies, procedures, and training programs. Controls analysis identifies gaps in the current security posture so that defenses can be strengthened enough to mitigate identified risks and improve the organization’s overall resilience against cyber threats.

Business Impact: Impact refers to the expected loss the enterprise would incur if the asset or application were successfully breached.
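The controls analysis described above can be approximated, very coarsely, as coverage of attacker techniques. The sketch maps each deployed control to the MITRE ATT&CK technique IDs it is assumed to prevent or detect. It then reports the covered share of the techniques on an attack path, along with the gaps. The control names and their coverage sets are hypothetical, and the binary covered-or-not model ignores the partial effectiveness a real analysis would weigh.

```python
# Hypothetical mapping of deployed controls to the MITRE ATT&CK techniques
# they prevent or detect (T1190 Exploit Public-Facing Application,
# T1059 Command and Scripting Interpreter, T1055 Process Injection,
# T1078 Valid Accounts, T1110 Brute Force).
CONTROL_COVERAGE = {
    "waf": {"T1190"},
    "edr": {"T1059", "T1055"},
    "mfa": {"T1078", "T1110"},
}


def covered_techniques(techniques, deployed_controls, coverage=CONTROL_COVERAGE):
    """Techniques on the attack path covered by at least one deployed control."""
    protected = set().union(*(coverage.get(c, set()) for c in deployed_controls))
    return set(techniques) & protected


def control_effectiveness(techniques, deployed_controls, coverage=CONTROL_COVERAGE) -> float:
    """Share of the attack path's techniques covered (binary coverage, no partial credit)."""
    techniques = set(techniques)
    if not techniques:
        raise ValueError("attack path must list at least one technique")
    return len(covered_techniques(techniques, deployed_controls, coverage)) / len(techniques)


def coverage_gaps(techniques, deployed_controls, coverage=CONTROL_COVERAGE):
    """Uncovered techniques, sorted: the gaps controls analysis should surface."""
    return sorted(set(techniques) - covered_techniques(techniques, deployed_controls, coverage))


# T1486 (Data Encrypted for Impact) has no mapped control in this toy setup.
path = ["T1190", "T1059", "T1486"]
print(control_effectiveness(path, ["edr"]), coverage_gaps(path, ["edr"]))
```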

One key point to note is that 4th generation prioritization focuses on individual instances of CVEs and other exposures. Different instances of the same CVE or misconfiguration may have different levels of exploitability, control effectiveness, and business impact.
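The instance-level point can be illustrated by scoring the same vulnerability on three assets as expected loss: the likelihood of a successful breach times the business impact in dollars. The per-instance likelihoods and dollar figures below are invented for illustration. In practice they would come from the threat, exploitability, and controls factors described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    vuln_id: str
    asset: str
    breach_likelihood: float  # 0-1, after accounting for threat, exposure and controls
    loss_if_breached: float   # business impact in dollars


def expected_loss(i: Instance) -> float:
    return i.breach_likelihood * i.loss_if_breached


def prioritize_instances(instances):
    """Rank individual instances, not CVEs: one CVE can land at both ends of the list."""
    return sorted(instances, key=expected_loss, reverse=True)


# Hypothetical: one vulnerability, three very different instances.
instances = [
    Instance("VULN-1", "lab-vm", 0.01, 10_000),
    Instance("VULN-1", "public-web", 0.30, 2_000_000),
    Instance("VULN-1", "build-server", 0.05, 500_000),
]
for inst in prioritize_instances(instances):
    print(f"{inst.asset:<14} ${expected_loss(inst):>12,.0f}")
```

A severity-only ranking would give all three rows the same score. Here the internet-facing instance carries thousands of times the expected loss of the lab VM.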

4th generation emphasizes automation, reducing the need for manual intervention and allowing for real-time prioritization that adapts as new information becomes available. It’s a proactive approach that aims to stay ahead of threats by anticipating and mitigating risks before they can be exploited.

AI brings advanced capabilities to vulnerability prioritization, enabling more precise, efficient, and proactive security management. It does so by predicting likely threats, dynamically adjusting risk scores, automating management tasks, providing contextual understanding, continuously learning, correlating threats, optimizing resources, and detecting anomalies.

Overall, 4th generation vulnerability prioritization (or simply exposure prioritization) transforms vulnerability prioritization from a largely reactive process into a proactive and predictive one. It enables organizations to stay ahead of attackers by focusing on the most relevant and impactful vulnerabilities. This leads to a more secure and resilient IT environment, with reduced risk of breaches and better protection of critical assets.

4th generation, i.e., risk-based, prioritization allows organizations to identify and address the riskiest exposures, not just high-severity or high-threat CVEs. This approach provides a thorough assessment of exposures across all assets in the enterprise. With risk-based prioritization, only 25% of exposures are classified as critical or high risk, down from 87% under severity-based prioritization (a drop of 62 percentage points). These are the exposures that have the highest impact on the organization and should be remediated first.
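The 25% figure is compared with the 87% from the severity-based model. A change between two shares can be stated either as a percentage-point drop or as a relative drop, and the two give quite different numbers. The small helper below is a hypothetical convenience that computes both from the figures in the text.

```python
def share_reduction(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point drop, relative drop in percent) between two shares."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    points = before_pct - after_pct
    relative = points / before_pct * 100.0
    return points, relative


points, relative = share_reduction(87.0, 25.0)
print(f"{points:.0f} percentage points, {relative:.1f}% relative")  # 62 percentage points, 71.3% relative
```

By the same arithmetic, the threat-based model’s 60% is 27 percentage points below the severity-based 87%.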

The Balbix exposure management platform implements 4th generation vulnerability prioritization. Telemetry data from your cybersecurity tools is deduplicated, correlated, and then analyzed by Balbix’s AI models to create a unified exposure and risk model. Vulnerability instances and other findings (exposures) are prioritized based on severity, threats, exploitability or accessibility, the effectiveness of security controls, and business criticality. Risk is quantified in dollars (or other monetary units). This prioritization information is fed into a “next best steps” computation that uses Shapley Econometric Models to calculate enterprise-wide priorities for mitigation and remediation actions that burn down risk to acceptable levels. Next Best Steps are then subdivided into projects, tickets, and automated orchestration steps. As issues are fixed, the system automatically validates the fixes and the cycle continues.
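The article does not describe how Balbix’s Shapley-based models work, so the following is only a generic illustration of the underlying idea. The Shapley value splits credit for a shared outcome fairly among the players that produced it. Here the outcome is dollar risk removed, and the players are remediation actions whose fixes overlap. The exposures, actions, and dollar amounts are hypothetical. The exact computation averages each action’s marginal contribution over every ordering of the actions, which is only practical for a handful of actions.

```python
from itertools import permutations

# Hypothetical data: dollar risk per exposure, and which exposures each action fixes.
RISK_BY_EXPOSURE = {"E1": 400_000.0, "E2": 250_000.0, "E3": 100_000.0}
ACTION_FIXES = {
    "patch_vpn": {"E1"},
    "enable_mfa": {"E1", "E2"},   # overlaps with patch_vpn on E1
    "rotate_keys": {"E3"},
}


def risk_reduction(actions) -> float:
    """Dollar risk removed by a set of actions (each exposure counted once)."""
    fixed = set().union(*(ACTION_FIXES[a] for a in actions))
    return sum((RISK_BY_EXPOSURE[e] for e in fixed), 0.0)


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings.
    Cost grows as n!, so this only suits a small number of players."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = []
        for p in order:
            before = value(coalition)
            coalition.append(p)
            totals[p] += value(coalition) - before
    return {p: t / len(orderings) for p, t in totals.items()}


credit = shapley_values(list(ACTION_FIXES), risk_reduction)
for action, dollars in sorted(credit.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{action:<12} ${dollars:,.0f}")
```

The $400,000 of risk on E1 is split evenly between the two actions that fix it, and the credits sum to the total risk removed. Ranking actions by this credit is one plausible way to order "next best steps". A production system would need sampling or model-specific approximations to scale beyond a few actions.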

Request a demo to determine the effectiveness of your risk prioritization program.